
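What Has the Open-Source Community Done Lately to Match GPT-4o? (Unfinished)

Title: [AI-Assisted Design] What Has the Open-Source Community Done Lately to Match GPT-4o?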

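Preface

GPT-4o was released two weeks ago. Since then we have published two articles covering its capabilities and usage, along with our take on hotly debated topics such as whether it will "replace ComfyUI". Interested readers can revisit them:

Back to today's topic: what has the open-source community done recently that can match GPT-4o? After searching across the web, I found two technologies worth watching.

- VARGPT-v1.1. An open-source autoregressive image generation model similar to GPT-4o.

- EasyControl. This technique has been out for a while, and it recently gained a wildly popular Ghibli-style LoRA whose results come close to GPT-4o.

Unfortunately, both have very high hardware requirements! 🥱 Typical consumer GPUs cannot handle them, so we will have to wait for quantized versions. Still, let's cover them today to stay informed and keep up with AI news.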

VARGPT-v1.1

VARGPT-v1.1: Improve Visual Autoregressive Large Unified Model via Iterative Instruction Tuning and Reinforcement Learning

Xianwei Zhuang¹*, Yuxin Xie¹*, Yufan Deng¹*, Dongchao Yang², Liming Liang¹, Jinghan Ru¹, Yuguo Yin¹, Yuexian Zou¹

¹ Peking University, ² The Chinese University of Hong Kong


News

- [2025-04-07] The technical report is released at https://arxiv.org/pdf/2504.02949.

- [2025-04-02] We release the more powerful unified model of VARGPT-v1.1 (7B+2B) at VARGPT-v1.1 and the editing model datasets at VARGPT-v1.1-edit. 🔥🔥🔥🔥🔥🔥

- [2025-04-01] We release the training (SFT and RL), inference and evaluation code of VARGPT-v1.1 and VARGPT at VARGPT-family-training for multimodal understanding and generation including image captioning, visual question answering (VQA), text-to-image generation and visual editing. 🔥🔥🔥🔥🔥🔥

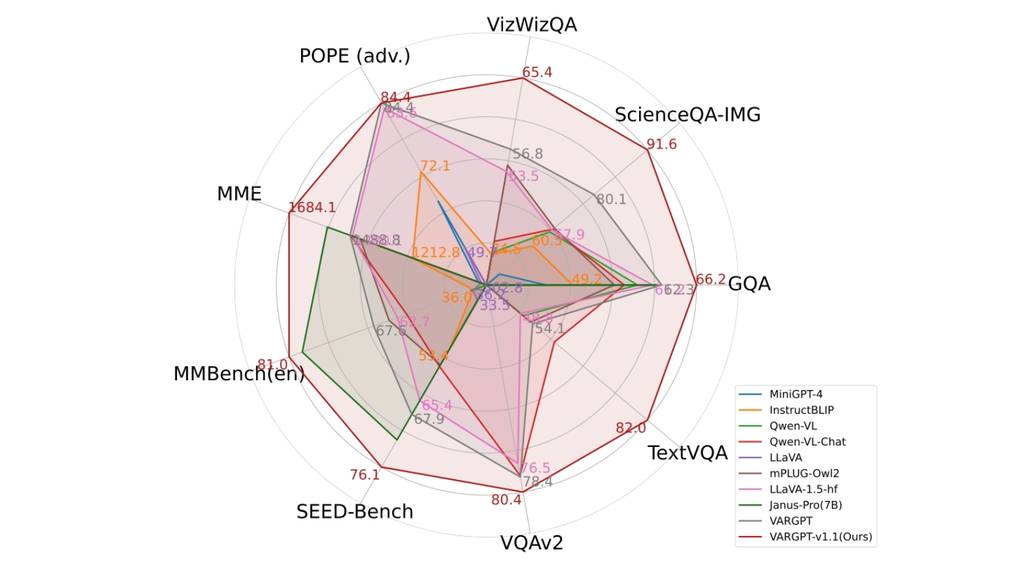

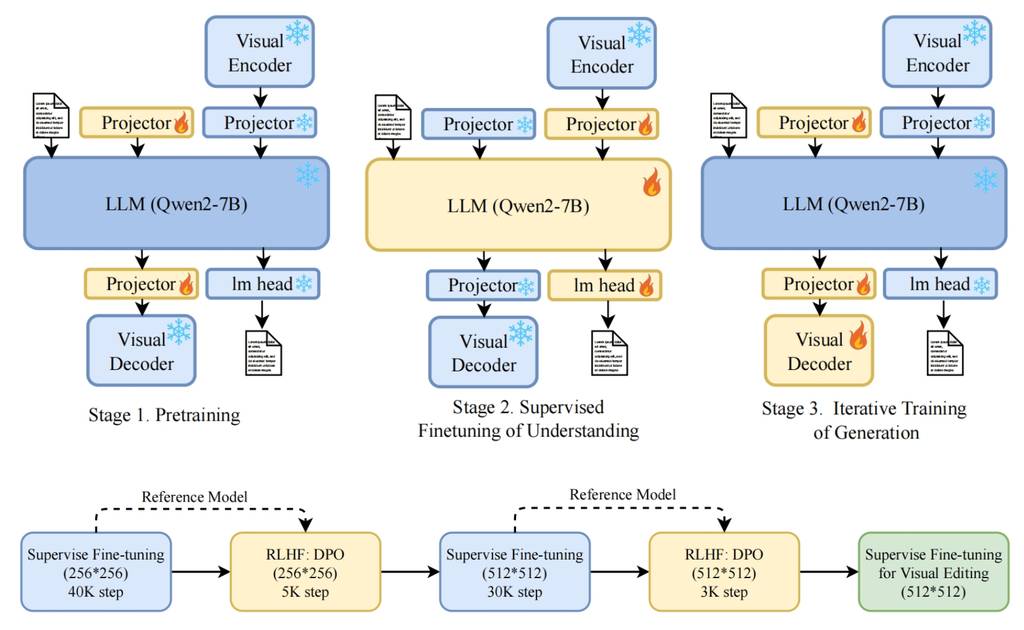

What is new about VARGPT-v1.1?

Compared with VARGPT, VARGPT-v1.1 has achieved comprehensive capability improvement. VARGPT-v1.1 integrates: (1) a novel training strategy combining iterative visual instruction tuning with reinforcement learning through Direct Preference Optimization (DPO), (2) an expanded training corpus containing 8.3M visual-generative instruction pairs, (3) an upgraded language backbone using Qwen2, (4) enhanced image generation resolution, and (5) emergent image editing capabilities without architectural modifications.
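For readers who have not met DPO before, the standard per-pair DPO objective can be sketched in a few lines of plain Python. This is a generic illustration of the loss only, not VARGPT-v1.1's training code; the function name `dpo_loss`, the default `beta=0.1`, and the log-probability values in the usage note are all assumptions made for this example.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for a single (chosen, rejected) preference pair.

    Inputs are sequence log-probabilities under the policy being trained
    and under a frozen reference model. Hypothetical helper, for illustration.
    """
    # Implicit rewards: how much more the policy favors a response than the reference does.
    chosen_reward = beta * (policy_chosen_logp - ref_chosen_logp)
    rejected_reward = beta * (policy_rejected_logp - ref_rejected_logp)
    margin = chosen_reward - rejected_reward
    # Loss is -log(sigmoid(margin)), computed in a numerically stable way.
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

When the policy and the reference agree, the margin is zero and the loss equals log 2. Calling `dpo_loss(-1.0, -3.0, -2.0, -2.0)` gives a smaller loss, because the policy already prefers the chosen response more strongly than the reference does.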

TODO

- Release the inference code.

- Release the code for evaluation.

- Release the model checkpoint.

- Supporting stronger visual generation capabilities.

- Release the training datasets.

- Release the training code.

- Release the technical report.

EasyControl

Implementation of EasyControl

EasyControl: Adding Efficient and Flexible Control for Diffusion Transformer

Yuxuan Zhang, Yirui Yuan, Yiren Song, Haofan Wang, Jiaming Liu

Tiamat AI, ShanghaiTech University, National University of Singapore, Liblib AI

Features

- Motivation: The architecture of diffusion models is transitioning from Unet-based to DiT (Diffusion Transformer). However, the DiT ecosystem lacks mature plugin support and faces challenges such as efficiency bottlenecks, conflicts in multi-condition coordination, and insufficient model adaptability.

- Contribution: We propose EasyControl, an efficient and flexible unified conditional DiT framework. By incorporating a lightweight Condition Injection LoRA module, a Position-Aware Training Paradigm, and a combination of Causal Attention mechanisms with KV Cache technology, we significantly enhance model compatibility (enabling plug-and-play functionality and style lossless control), generation flexibility (supporting multiple resolutions, aspect ratios, and multi-condition combinations), and inference efficiency.
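To make the "lightweight LoRA module" idea concrete, here is a minimal sketch of a generic LoRA forward pass: a frozen weight matrix `W` plus a scaled low-rank update `B @ A`. It illustrates LoRA in general, under the usual `alpha / rank` scaling convention. How EasyControl wires its Condition Injection LoRA into the condition branch is not described in the text above, so nothing here should be read as its implementation. The names `matvec` and `lora_forward` are hypothetical.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]

def lora_forward(W, A, B, x, alpha=1.0):
    """Compute W x + (alpha / r) * B (A x), the generic LoRA forward pass.

    W: frozen weights, shape (out, in)
    A: trainable down-projection, shape (r, in)
    B: trainable up-projection, shape (out, r)
    """
    rank = len(A)  # r is the number of rows in A
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * d for b, d in zip(base, delta)]
```

Because only `A` and `B` are trained, the adapter adds `r * (in + out)` parameters per layer instead of `in * out`. When `B` is all zeros (a common initialization), the output equals that of the frozen base layer.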

News

- 2025-03-12: ⭐️ Inference code is released. Once we have ensured that everything is functioning correctly, the new model will be merged into this repository. Stay tuned for updates! 😊

- 2025-03-18: 🔥 We have released our pre-trained checkpoints on Hugging Face! You can now try out EasyControl with the official weights.

- 2025-03-19: 🔥 We have released a Hugging Face demo! You can now try out EasyControl in the Hugging Face Space. Enjoy!

[Figure: Example 1, Example 2]

- 2025-04-01: 🔥 New Stylized Img2Img Control Model is now released!! Transform portraits into Studio Ghibli-style artwork using this LoRA model. Trained on only 100 real Asian faces paired with GPT-4o-generated Ghibli-style counterparts, it preserves facial features while applying the iconic anime aesthetic.

[Figure: Example 3, Example 4]

- 2025-04-03: Thanks to jax-explorer, Ghibli Img2Img Control ComfyUI Node is supported!

- 2025-04-07: 🔥 Thanks to the great work by CFG-Zero* team, EasyControl is now integrated with CFG-Zero*!! With just a few lines of code, you can boost image fidelity and controllability!! You can download the modified code from this link and try it.

[Figure: Source Image, CFG, CFG-Zero*]

Installation

We recommend using Python 3.10 and PyTorch with CUDA support. To set up the environment:

```bash
# Create a new conda environment
conda create -n easycontrol python=3.10
conda activate easycontrol

# Install other dependencies
pip install -r requirements.txt
```
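After installing, a quick sanity check can confirm that the environment matches the recommendation above. The sketch below is not part of the EasyControl repository. The helper name `check_environment` is hypothetical, and treating Python 3.10 as a minimum rather than an exact requirement is an assumption.

```python
import importlib.util
import sys

def check_environment(min_python=(3, 10)):
    """Report whether Python, PyTorch, and CUDA look usable (hypothetical helper)."""
    report = {
        "python_ok": sys.version_info[:2] >= min_python,
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "cuda_available": False,
    }
    if report["torch_installed"]:
        # Imported only when present, so the check itself never requires PyTorch.
        import torch
        report["cuda_available"] = bool(torch.cuda.is_available())
    return report
```

Running `print(check_environment())` inside the activated `easycontrol` environment should show all three values as `True` on a machine with a working CUDA setup.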

Download

You can download the models directly from Hugging Face, or with a Python script:

```python
from huggingface_hub import hf_hub_download

hf_hub_download(repo_id="Xiaojiu-Z/EasyControl", filename="models/canny.safetensors", local_dir="./")
hf_hub_download(repo_id="Xiaojiu-Z/EasyControl", filename="models/depth.safetensors", local_dir="./")
hf_hub_download(repo_id="Xiaojiu-Z/EasyControl", filename="models/hedsketch.safetensors", local_dir="./")
hf_hub_download(repo_id="Xiaojiu-Z/EasyControl", filename="models/inpainting.safetensors", local_dir="./")
hf_hub_download(repo_id="Xiaojiu-Z/EasyControl", filename="models/pose.safetensors", local_dir="./")
hf_hub_download(repo_id="Xiaojiu-Z/EasyControl", filename="models/seg.safetensors", local_dir="./")
hf_hub_download(repo_id="Xiaojiu-Z/EasyControl", filename="models/subject.safetensors", local_dir="./")
hf_hub_download(repo_id="Xiaojiu-Z/EasyControl", filename="models/Ghibli.safetensors", local_dir="./")
```
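If you only need some of the checkpoints, the same calls can be wrapped in a small loop. This is a convenience sketch built on the repository ID and filenames listed above; the helper names `model_filenames` and `download_models` are hypothetical and do not come from the EasyControl repository.

```python
REPO_ID = "Xiaojiu-Z/EasyControl"
MODEL_NAMES = ["canny", "depth", "hedsketch", "inpainting",
               "pose", "seg", "subject", "Ghibli"]

def model_filenames(names=MODEL_NAMES):
    """Map short model names to their paths inside the Hugging Face repo."""
    return [f"models/{name}.safetensors" for name in names]

def download_models(names=MODEL_NAMES, local_dir="./"):
    """Download the selected checkpoints and return their local paths."""
    # Imported lazily so model_filenames() works without huggingface_hub installed.
    from huggingface_hub import hf_hub_download
    return [
        hf_hub_download(repo_id=REPO_ID, filename=filename, local_dir=local_dir)
        for filename in model_filenames(names)
    ]
```

For example, `download_models(["Ghibli"])` fetches only the Ghibli-style LoRA instead of all eight files.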

If you cannot access Hugging Face, you can use hf-mirror to download the models:

```bash
export HF_ENDPOINT=https://hf-mirror.com
huggingface-cli download --resume-download Xiaojiu-Z/EasyControl --local-dir checkpoints --local-dir-use-symlinks False
```
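The same mirror setup can be done from Python. The sketch below assumes `huggingface_hub` is installed; the helper names `use_mirror` and `download_all` are hypothetical. One caveat: `huggingface_hub` reads `HF_ENDPOINT` when it is first imported, so the variable has to be set before that import happens anywhere in the process.

```python
import os

def use_mirror(endpoint="https://hf-mirror.com"):
    """Point Hugging Face downloads at a mirror by setting HF_ENDPOINT."""
    os.environ["HF_ENDPOINT"] = endpoint

def download_all(local_dir="checkpoints"):
    """Download the whole EasyControl repo through the mirror."""
    use_mirror()
    # Imported after HF_ENDPOINT is set, because the endpoint is read at import time.
    from huggingface_hub import snapshot_download
    return snapshot_download(repo_id="Xiaojiu-Z/EasyControl", local_dir=local_dir)
```

Calling `download_all()` places the files under `checkpoints`, matching the `--local-dir` used in the shell command above.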

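Closing Thoughts

Finally, let's look forward to continued progress from the open-source community, so that we can all benefit from this technology more conveniently and at lower cost, and soon.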